This post details my adventures with the Linux virtual memory subsystem, and my discovery of a creative way to taunt the OOM (out of memory) killer by accumulating memory in the kernel, rather than in userspace.

Keep reading and you’ll learn:

- Internal details of the Linux kernel’s demand paging implementation

- How to exploit virtual memory to implement highly efficient sparse data structures

- What page tables are and how to calculate the memory overhead incurred by them

- A cute way to get killed by the OOM killer while appearing to consume very little memory (great for parties)

Note: Victor Michel wrote a great follow up to this post here.

Part 1: Demand paging is nuanced

As usual, the story begins with me asking questions about implementation details. This time, about the Linux kernel’s demand paging implementation.

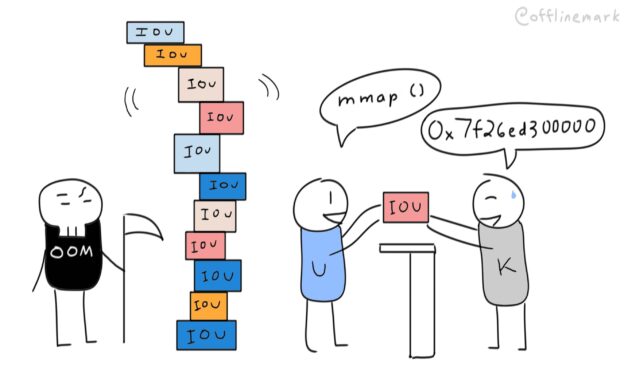

In Operating Systems 101 we learn that operating systems are “lazy” when they allocate memory to processes. When you mmap() an anonymous page, the kernel slyly returns a pointer immediately. It then waits until you trigger a page fault by “touching” that memory before doing the real memory allocation work. This is called “demand paging”.

This is efficient: if the memory is never touched, no physical memory is ever allocated. This also means you can allocate virtual memory in vast excess of what is physically available (“overcommit”), which can be useful.[1] You just can’t touch it all.

Let’s dive deeper. Barring execution, “touching” memory means reading or writing. Writes to a new mmap’d region require the kernel to perform a full memory allocation. You need memory, you need it now, and the kernel can’t push it off any longer.

Now, the question: What about reads?

Unlike writes, reads to a new mmap’d region do not trigger a memory allocation. The kernel continues to push off the allocation by exploiting how new anonymous mappings must be zero initialized. Instead of allocating memory, the kernel services the page fault using the “zero page”: a pre-allocated page of physical memory, completely filled with zeros. In theory this is “free” — a single physical frame can back all zero-initialized pages.

The point? Demand paging is nuanced — not all ways of accessing a new mapping require the kernel to allocate memory.
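To make the read case concrete, here is a minimal sketch (not part of the original experiments) that maps a fresh anonymous region, reads one byte per page, and sums what it sees. The function name `sum_first_bytes_of_fresh_mapping` is made up for illustration; the only claim is that every byte of a never-written anonymous mapping reads as zero.

```cpp
#include <sys/mman.h>
#include <unistd.h>

#include <cstddef>

// Hypothetical helper: maps `num_pages` anonymous, read-only pages, reads the
// first byte of each page, and returns the sum of those bytes.
// Returns -1 if mmap fails.
long sum_first_bytes_of_fresh_mapping(std::size_t num_pages) {
    const std::size_t page_size =
        static_cast<std::size_t>(sysconf(_SC_PAGESIZE));
    const std::size_t len = num_pages * page_size;

    void* p = mmap(nullptr, len, PROT_READ, MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
    if (p == MAP_FAILED) return -1;

    // volatile so the compiler cannot drop the reads.
    const volatile unsigned char* bytes =
        static_cast<const volatile unsigned char*>(p);

    long sum = 0;
    for (std::size_t i = 0; i < len; i += page_size) {
        sum += bytes[i];  // each first read of a page triggers a page fault
    }

    munmap(p, len);
    return sum;
}
```

Calling `sum_first_bytes_of_fresh_mapping(1024)` should return 0, since every page was zero-initialized and never written.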

Let’s see what this looks like in the source. The core page fault handler, handle_mm_fault is in mm/memory.c. A few calls deep via __handle_mm_fault and handle_pte_fault, we hit this block:

```c
static vm_fault_t handle_pte_fault(struct vm_fault *vmf)
{
    // ...
    if (!vmf->pte) {
        if (vma_is_anonymous(vmf->vma))
            return do_anonymous_page(vmf);
        else
            // ...
    }
    // ...
}
```

Which leads us to do_anonymous_page, the core page fault handler for anonymous pages.

```c
static vm_fault_t do_anonymous_page(struct vm_fault *vmf)
{
    // ...
    if (pte_alloc(vma->vm_mm, vmf->pmd))
        return VM_FAULT_OOM;
    // ...
    /* Use the zero-page for reads */
    if (!(vmf->flags & FAULT_FLAG_WRITE) &&                       // (1)
            !mm_forbids_zeropage(vma->vm_mm)) {
        entry = pte_mkspecial(pfn_pte(my_zero_pfn(vmf->address),  // (2)
                                      vma->vm_page_prot));
        vmf->pte = pte_offset_map_lock(vma->vm_mm, vmf->pmd,
                                       vmf->address, &vmf->ptl);
        // ...
        goto setpte;
    }
    // ...
setpte:
    set_pte_at(vma->vm_mm, vmf->address, vmf->pte, entry);        // (3)
    // ...
    return ret;
    // ...
}
```

Bingo. It checks whether a read caused the fault (1), then maps the virtual page to the zero page (2 and 3).

Note that this all happens in the page fault handler, which is an implementation choice. The mmap core logic does not touch the page tables at all, and only records the presence of the new mapping. It leaves the mapping’s page table entry non-present (present bit = 0) which will trigger a page fault on access.

Alternatively, mmap could proactively allocate the page table entry, and initialize it to the zero page. This would avoid a page fault on the first read, but at the cost of initializing (potentially many) page table entries up front. Given that it’s most efficient to be maximally lazy, the current implementation is best.

Part 2: Allocating infinite memory, and touching it too

This led me to another question. Since reads from anonymous mappings are “free”, in addition to allocating excessive virtual memory, can’t you actually touch all of it? As long as that “touch” is a read?

Time for an experiment. Here’s some code that allocates 100 GB of linear memory, and tries to read from the first byte of each page. It allocates 512 MB at a time because I incorrectly thought you couldn’t mmap 100 GB at once 🙂.[2][3] My test system was an x64 Ubuntu 20.04 VPS.

```cpp
#include <sys/mman.h>

#include <cstdio>     // getchar, perror
#include <iostream>
#include <stdexcept>  // std::runtime_error

const size_t MB = 1024 * 1024;
const size_t GB = MB * 1024;

int main() {
    size_t alloc_size = 512 * MB;
    size_t total_alloc = 100 * GB;
    size_t num_allocs = total_alloc / alloc_size;

    // std::cout << "alloc_size (MB)" << alloc_size / (1024*1024)<< "\n";
    // std::cout << "total_alloc " << total_alloc << "\n";
    // std::cout << "num_allocs " << num_allocs << "\n";

    std::cout << "Allocating mem...\n";

    char* base = nullptr;

    // Allocate a ton of memory
    for (size_t i = 0; i < num_allocs; i++) {
        // Unsound alert - assuming allocations are contiguous and grow down.
        base = (char*)mmap(NULL, alloc_size, PROT_READ,
                           MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
        if (base == MAP_FAILED) {
            perror(NULL);
            throw std::runtime_error("Fail");
        }
        // Print a 1-based allocation counter next to each address.
        std::cout << (void*)base << " " << i + 1 << "\n";
    }

    std::cout << "Allocated Virtual Mem (GB): " << total_alloc / GB << "\n";
    std::cout << "Base Addr: " << (void*)base << "\n";
    std::cout << "Press enter to start reading.\n";
    getchar();

    std::cout << "Reading each page...\n";

    // Read the first byte of each page. The volatile store keeps the
    // compiler from optimizing the otherwise-unused read away.
    for (size_t i = 0; i < total_alloc; i += 0x1000) {
        volatile char x = base[i];
        (void)x;
    }

    std::cout << "Done!\n";
    getchar();
}
```

When we run it, here’s what we see:

```
$ ./demo
Allocating mem...
0x7f3f6d300000 1
0x7f3f4d300000 2
0x7f3f2d300000 3
...
0x7f26cd300000 198
0x7f26ad300000 199
0x7f268d300000 200
Allocated Virtual Mem (GB): 100
Base Addr: 0x7f268d300000
Press enter to start reading.
```

It successfully allocated 100 GB of linear virtual memory in 512 MB chunks. We can confirm this with pmap, which shows a 100 GB region of anonymous virtual memory at the base address printed.

```
$ pmap `pidof demo`
485209:   ./demo
00005600e1d0c000         4K r---- demo
00005600e1d0d000         4K r-x-- demo
00005600e1d0e000         4K r---- demo
00005600e1d0f000         4K r---- demo
00005600e1d10000         4K rw--- demo
00005600e2a47000       132K rw---   [ anon ]
00007f268d300000 104857600K r----   [ anon ]   <<<< 100 GB region
00007f3f8d300000        16K rw---   [ anon ]
00007f3f8d304000        60K r---- libm-2.31.so
00007f3f8d313000       668K r-x-- libm-2.31.so
...
```

What does htop say?

htop confirms that 100 GB of virtual memory is allocated (VIRT column), but a much more reasonable 1540 KB of resident memory (RES) is actually occupying RAM. Note the MINFLT column — this is the number of “minor” page faults that have occurred. A minor page fault is one that does not require loading from disk. We will be triggering lots of these and should expect to see this number grow dramatically.
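The minor fault counter can also be sampled from inside the process. The sketch below is an illustration rather than what htop itself does: it assumes `getrusage(RUSAGE_SELF, ...)` and its `ru_minflt` field are an acceptable stand-in for the MINFLT column, and both helper names are hypothetical.

```cpp
#include <sys/mman.h>
#include <sys/resource.h>
#include <unistd.h>

#include <cstddef>

// Hypothetical helper: minor faults taken so far by this process,
// or -1 if getrusage fails.
long current_minor_faults() {
    struct rusage usage {};
    if (getrusage(RUSAGE_SELF, &usage) != 0) return -1;
    return usage.ru_minflt;
}

// Hypothetical helper: maps `num_pages` fresh anonymous pages, reads one byte
// from each, and returns how much the minor fault counter grew meanwhile.
// Returns -1 if mmap fails.
long minor_faults_while_reading(std::size_t num_pages) {
    const std::size_t page_size =
        static_cast<std::size_t>(sysconf(_SC_PAGESIZE));
    const std::size_t len = num_pages * page_size;

    void* p = mmap(nullptr, len, PROT_READ, MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
    if (p == MAP_FAILED) return -1;

    const volatile unsigned char* bytes =
        static_cast<const volatile unsigned char*>(p);

    const long before = current_minor_faults();
    for (std::size_t i = 0; i < len; i += page_size) {
        (void)bytes[i];  // volatile read: cannot be optimized away
    }
    const long after = current_minor_faults();

    munmap(p, len);
    return after - before;
}
```

The exact delta depends on the kernel configuration (transparent huge pages, for example, can service many pages with a single fault), so treat the result as a rough indicator rather than an exact page count.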

Here’s what happens after I press enter to trigger reading.

```
0x7f26cd300000 198
0x7f26ad300000 199
0x7f268d300000 200
Allocated Virtual Mem (GB): 100
Base Addr: 0x7f268d300000
Press enter to start reading.

Reading each page...
Done!
```

The process hits the “Done!” print. This means it successfully touched every page of the 100 GB allocation!

htop confirms that many minor faults have occurred. We would expect the reads to cause exactly 26214400 faults (100 GB / 4 KB), and indeed, 26214552 – 26214400 = 152, the number we started with. Intriguingly, the resident memory appears to have also increased, which should not have happened. See Appendix A for discussion of this.

Here’s a video to see it all in action:

So the theory is confirmed! You can apparently “Allocate your memory, and touch it too” (as long as that touch is a read).[4]

If your application, for some reason, benefits from the ability to have a 100 GB array of zeros then this is perfect for you. What about the rest of us?

A closer-to-real-life application is a sparse array. A sparse array is a (typically very large) array, whose elements are mostly zero. By exploiting demand paging, you can implement a memory efficient sparse array, where the majority of the array is backed by the zero page (or not even mapped). You get the fast indexing benefits of an array while avoiding the memory overhead.
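As a rough illustration of the idea, here is a minimal sparse array sketch backed directly by an anonymous mapping. The class name `SparseU64Array` and its interface are invented for this example, and the `MAP_NORESERVE` flag is an assumption on my part (it asks the kernel not to reserve swap for the mapping); this is a sketch, not a production container.

```cpp
#include <sys/mman.h>

#include <cstddef>
#include <cstdint>
#include <stdexcept>

// Hypothetical sparse array of 64-bit integers. Untouched elements read as
// zero; physical memory is only allocated for pages that are written.
class SparseU64Array {
public:
    explicit SparseU64Array(std::size_t count)
        : count_(count), bytes_(count * sizeof(std::uint64_t)) {
        void* p = mmap(nullptr, bytes_, PROT_READ | PROT_WRITE,
                       MAP_PRIVATE | MAP_ANONYMOUS | MAP_NORESERVE, -1, 0);
        if (p == MAP_FAILED) throw std::runtime_error("mmap failed");
        data_ = static_cast<std::uint64_t*>(p);
    }

    ~SparseU64Array() { munmap(data_, bytes_); }

    // Owns a raw mapping, so copying is disabled to avoid double unmapping.
    SparseU64Array(const SparseU64Array&) = delete;
    SparseU64Array& operator=(const SparseU64Array&) = delete;

    // A read of an untouched page is serviced by the shared zero page.
    std::uint64_t get(std::size_t i) const {
        check(i);
        return data_[i];
    }

    // The first write to a page makes the kernel allocate a real frame.
    void set(std::size_t i, std::uint64_t value) {
        check(i);
        data_[i] = value;
    }

    std::size_t size() const { return count_; }

private:
    void check(std::size_t i) const {
        if (i >= count_) throw std::out_of_range("index out of range");
    }

    std::size_t count_;
    std::size_t bytes_;
    std::uint64_t* data_ = nullptr;
};
```

For example, `SparseU64Array a(1u << 24); a.set(12345, 7);` creates a 128 MB virtual array in which only the single page containing element 12345 is backed by its own physical frame.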

Part 3: Playing with a killer

I have a confession. Remember when I said not all ways of accessing memory require the kernel to allocate memory? Yeah, that’s a lie.

Even though a read can be serviced by the shared zero page, that doesn’t mean no memory is allocated. Even the process of mapping a zero page requires allocating memory. And here’s where we get into the nitty gritty.

The hidden overhead of page tables

The overhead comes from the virtual memory infrastructure itself — the page tables. Page tables are data structures that power the virtual memory subsystem. Like regular data structures, they occupy memory, only their overhead is easy to overlook, since it’s hidden from userspace.

This is what page tables on x86_64 look like:

They’re a tree data structure that is 4 levels deep, with each node (table) being an array of 512 8-byte entries. Together these tables offer an efficient way to represent a mapping between every virtual page in the address space and a physical frame.

Here’s where the overhead comes from. Each page touched requires 1 Page Table Entry (PTE) to be allocated. However, PTEs aren’t allocated individually. They’re allocated in blocks of 512 called Page Tables. Each Page Table requires 1 Page Directory Entry to be allocated. But Page Directory Entries aren’t allocated individually either, they’re allocated in blocks of 512 called Page Directories. This propagates all the way up to the top level of the tree: the PML4 Table (“Page Map Level 4” Table). There is only one PML4 Table and it’s pointed to by the CPU’s CR3 register.

Note that while “Page Table” refers to a specific type of table, these tables are all colloquially referred to as “page tables”.
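To see how one virtual address selects an entry at each level, here is a small sketch that splits an address into its four 9-bit table indices and 12-bit page offset. It assumes 4-level paging with 4 KB pages as described above; the struct and function names are hypothetical.

```cpp
#include <cstdint>

// Indices into each level of the x86_64 4-level page table tree,
// plus the byte offset within the final 4 KB page.
struct PageTableIndices {
    unsigned pml4;    // bits 47..39: entry in the PML4 Table
    unsigned pdpt;    // bits 38..30: entry in the Page Directory Pointer Table
    unsigned pd;      // bits 29..21: entry in the Page Directory
    unsigned pt;      // bits 20..12: entry in the Page Table
    unsigned offset;  // bits 11..0:  byte offset within the page
};

// Each table has 512 entries, so each index is 9 bits wide (mask 0x1ff).
constexpr PageTableIndices decompose(std::uint64_t vaddr) {
    return PageTableIndices{
        static_cast<unsigned>((vaddr >> 39) & 0x1ff),
        static_cast<unsigned>((vaddr >> 30) & 0x1ff),
        static_cast<unsigned>((vaddr >> 21) & 0x1ff),
        static_cast<unsigned>((vaddr >> 12) & 0x1ff),
        static_cast<unsigned>(vaddr & 0xfff),
    };
}
```

For the base address printed in Part 2, `decompose(0x7f268d300000)` gives PML4 index 254, PDPT index 154, Page Directory index 105, Page Table index 256, and offset 0.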

Since page table entries are 8 bytes, and all page tables contain 512 entries, it follows that page tables are 4096 bytes. This doesn’t seem like much, but to map even one page, you need one of each table. That’s already 16 KB of overhead. If you’re mapping gigabytes of virtual address space, this overhead will add up.

Intentionally trying to get killed

This leads us to the final question(s). Specifically, how much can page table overhead add up to? Is it possible for page tables to occupy some non-negligible portion of memory? Is it possible to exhaust memory, purely from page tables? How much virtual memory would we need to map to do so?

Here’s pseudocode to calculate the page table overhead from a virtual memory allocation.

```
Input: virtual_pages_allocated

page_table_entries = virtual_pages_allocated

page_tables        = CEILING(page_table_entries, 512) / 512
page_tables_bytes  = page_tables * 4096

page_dirs          = CEILING(page_tables, 512) / 512
page_dirs_bytes    = page_dirs * 4096

pdp_tables         = CEILING(page_dirs, 512) / 512
pdp_tables_bytes   = pdp_tables * 4096

pml4_table         = 1
pml4_table_bytes   = 1 * 4096

Output: total_overhead = page_tables_bytes + page_dirs_bytes + \
                         pdp_tables_bytes + pml4_table_bytes
```

Remember that tables are allocated as a whole. Even if a table is partially filled, it still occupies the full 4 KB. This is why the calculation needs to round up to the nearest multiple of 512 (expressed as the CEILING function from Excel).

Using this method, we calculate that a 512 GB allocation of virtual memory requires slightly over 1 GB of page tables!

| Quantity | Value |
| --- | --- |
| VM Alloc GB | 512 |
| Bytes Alloc | 549755813888 |
| Pages Alloc | 134217728 |
| PTEs | 134217728 |
| Page Tables | 262144 |
| Page Tables Bytes | 1073741824 |
| Page Dirs | 512 |
| Page Dirs Bytes | 2097152 |
| PDP Tables | 1 |
| PDP Tables Bytes | 4096 |
| PML4 Tables | 1 |
| PML4 Tables Bytes | 4096 |
| Total Overhead Bytes | 1075847168 |
| Total Overhead GB | 1.001960754 |

Here’s a spreadsheet so you can play with the numbers yourself: https://docs.google.com/spreadsheets/d/10h8sSvocjI-Fu6NW7B3jv1C3NGoflgaGTp1HGpkV1e4/edit?usp=sharing

My machine only has 1 GB of memory, so this should be more than enough to exhaust memory and ideally, trigger the OOM killer.
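The overhead calculation can also be sketched in C++. Like the pseudocode, this assumes a single contiguous, table-aligned region, so it is an estimate rather than an exact accounting of what the kernel allocates. The names below are mine, not from any kernel API.

```cpp
#include <cstdint>

constexpr std::uint64_t kEntriesPerTable = 512;
constexpr std::uint64_t kTableBytes = 4096;

// Number of 512-entry tables needed to hold `entries` entries, rounded up
// because tables are always allocated whole.
constexpr std::uint64_t tables_needed(std::uint64_t entries) {
    return (entries + kEntriesPerTable - 1) / kEntriesPerTable;
}

struct PageTableOverhead {
    std::uint64_t page_tables;
    std::uint64_t page_dirs;
    std::uint64_t pdp_tables;
    std::uint64_t pml4_tables;

    constexpr std::uint64_t total_bytes() const {
        return (page_tables + page_dirs + pdp_tables + pml4_tables) *
               kTableBytes;
    }
};

// Estimates the tables needed to map `virtual_pages` contiguous 4 KB pages.
constexpr PageTableOverhead overhead_for_pages(std::uint64_t virtual_pages) {
    return PageTableOverhead{
        tables_needed(virtual_pages),                                // one PTE per page
        tables_needed(tables_needed(virtual_pages)),                 // one PDE per Page Table
        tables_needed(tables_needed(tables_needed(virtual_pages))),  // one PDPTE per Page Directory
        1,                                                           // always a single PML4 Table
    };
}

// 512 GB of 4 KB pages: matches the total in the table above.
static_assert(overhead_for_pages(134217728).total_bytes() == 1075847168,
              "512 GB should need slightly over 1 GB of page tables");
```

The same function reproduces the single-page case from earlier: `overhead_for_pages(1).total_bytes()` is 16384, one table at each of the four levels.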

Time for another experiment! I changed the code above to allocate 512 GB instead of 100 GB, and reran it.

Here’s the output:

```
...
0x7eca126ba000 1022
0x7ec9f26ba000 1023
0x7ec9d26ba000 1024
Allocated Virtual Mem (GB): 512
Base Addr: 0x7ec9d26ba000
Press enter to start reading.

Reading each page...
fish: './demo2' terminated by signal SIGKILL (Forced quit)
```

It worked! After a few minutes, memory was exhausted, and the OOM killer killed the process.

Here’s a screenshot right before the process is killed. Points of interest:

- System memory meter close to maxed out: 893 MB of 981 MB used.

- Resident memory remains extremely low despite the OOM score being extremely high. This shows that page table overhead does not affect resident memory.

- Minor faults are orders of magnitude greater than anything else on the system.

- The bottom section shows the VmPTE field of /proc/*/status, confirming that close to 800 MB of memory is used by page tables (close to the physical memory limit).
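A process can also watch its own page table usage by reading the same field. The sketch below assumes the usual `VmPTE:    <n> kB` line layout of /proc/self/status; the function names are hypothetical.

```cpp
#include <fstream>
#include <istream>
#include <sstream>
#include <string>

// Hypothetical helper: scans status-file text for the "VmPTE:" line and
// returns its value in kB, or -1 if the line is missing or malformed.
long parse_vmpte_kb(std::istream& status) {
    std::string line;
    while (std::getline(status, line)) {
        if (line.rfind("VmPTE:", 0) == 0) {  // line starts with "VmPTE:"
            std::istringstream fields(line.substr(6));
            long kb = -1;
            fields >> kb;  // skips the leading whitespace before the number
            return fields ? kb : -1;
        }
    }
    return -1;
}

// Hypothetical helper: page table memory of the calling process in kB,
// or -1 if /proc/self/status is unavailable.
long own_vmpte_kb() {
    std::ifstream status("/proc/self/status");
    return parse_vmpte_kb(status);
}
```

Calling `own_vmpte_kb()` periodically inside the page-touching loop would show the value climbing while resident memory stays flat.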

The pictures are nice, but it’s much more exciting to see it happen live.

Note that the code performs all the mmaps first, then touches the memory. This is intentional. An alternate approach where it touches the mapping immediately after mmap’ing won’t work because mmap will eventually fail. This will prevent us from allocating more memory and pushing the system to its limit.

When we mmap everything up front and then touch the pages, we take advantage of overcommit, which allows mmaps to succeed long after physical memory limits would have been exceeded. We then exploit the fact that a valid memory access has no way to “fail”: page faults must be handled.

An old friend

With a sufficiently quiet system (and some luck), it’s possible to get this stack trace in the OOM killer’s logging[5]:

This is the kernel stack trace of the allocation that triggered the OOM kill. In the middle of the trace, we find familiar functions: handle_mm_fault, and do_anonymous_page. This is the page fault handler for anonymous pages, which we saw earlier.

This trace shows that the final allocation that triggered the OOM killer was our own attempt at mapping another zero page — an operation that’s “free” in theory, but can get you OOM killed in practice.

Conclusion

If you look closely enough at demand paging you’ll find nuance. Writes obviously trigger allocations, but reads can be efficiently serviced by the shared zero page for “free”.

This enables applications to allocate vast amounts of virtual address space and access all of it... as long as they only want to read (zeros). This can be applied practically with sparse data structures, whose elements are mostly zero.

That said, the eager virtual-memory-savvy programmer should be warned — while invisible to userspace, “free” zero page mappings are not free in practice, and can incur substantial memory overhead from the virtual memory infrastructure itself.

Page tables are just one of several sources of system memory overhead (per-thread kernel stacks being another example). Usually application developers can safely ignore these, but I hope this article offers a glimpse into the world of systems developers whose responsibility it is to provide that safety.

Learn something new? Let me know!

Did you learn something from this post? I’d love to hear what it was — tweet me @offlinemark!


Acknowledgements

Thanks to Jann Horn for help understanding the resident memory spike. Thanks to Jake Miller, Roderic Morris, James Larisch, and William Woodruff for reviewing earlier drafts of this post.

Discussion

Discussions on: twitter, reddit, Hacker News, lobsters.

Appendix A: Mysterious resident memory spike

Summary: if you need to measure resident memory usage with high accuracy, do not use htop (or /proc/*/{statm,status}). Use /proc/*/smaps or /proc/*/smaps_rollup instead.

In these experiments, a mysterious 1.5 MB spike in resident memory appears while triggering reads of all the pages. This should not be the case — as described above, faulting zero pages only allocates page tables, which don’t count toward resident memory. The resident memory reported immediately before performing the reads should stay constant for the remainder of execution.

I was mystified, and convinced that I found a kernel bug. I confirmed that there is nothing happening in userspace that would cause this — the page faulting loop has a single memory access instruction in its body. Single stepping confirms that this instruction alone triggers the spike. I also confirmed that the source of htop’s data, /proc/*/statm, shows this spike also, so it’s not a htop bug.

To my disappointment, it turns out that this isn’t a bug. (Thanks to Jann Horn for showing me this.)

Different files in /proc offer varying levels of accuracy. This is not documented in the man page, but is mentioned in the kernel’s internal documentation and this Stack Overflow post. htop gets its memory statistics from /proc/*/statm, which intentionally offers a lower accuracy measurement in exchange for better multicore performance. To avoid lock contention, the kernel records memory usage in per-thread caches, and then synchronizes those with the process-wide cache after every 64 page faults.

So, the “spike” I was observing is not actually a spike — it’s the synchronization threshold being hit, which updates the process-wide information in /proc/*/statm. The resident memory is actually always at 3 MB, it’s just that the reporting layer takes some time to get updated because my program happens to stop on a getchar() in the middle of the 64 fault cycle.

If you want high accuracy memory information, use /proc/*/smaps and /proc/*/smaps_rollup. At the expense of performance, these files offer higher accuracy because their implementations walk internal data structures, rather than using caches.

A lingering mystery remains. Can you help?

A small mystery remains: if the statistics sync every 64 page faults, how can 1.5 MB of error accumulate? Syncing every 64 faults suggests that the maximum error would be 4 KB * 63 faults = 252 KB of error.

Both Jann and I are stumped by this. If you can help explain this, please contact me. LKML discussion here.

Update: Victor Michel has answered this on Twitter and attributes this to late accounting of file mappings that occurs on first sync. Thanks Victor!

1. Some examples of applications that allocate immense virtual memory are Address Sanitizer and Webkit.

2. Thanks to @damageboy for pointing this out.

3. This is lazy, dirty research code :) It is not sound or portable, and only just works because Linux happens to place the mmaps contiguously, growing down in memory.

4. Or if you prefer, the famous Henry Ford quote: “You can touch as much memory as you want, as long as you are only reading”.

5. Fun fact! I found a bug/issue while looking through this logging which led to my first contribution to Linux. More details on Twitter.
